Readings about load balancing

Load Balancing

Cloudflare Unimog

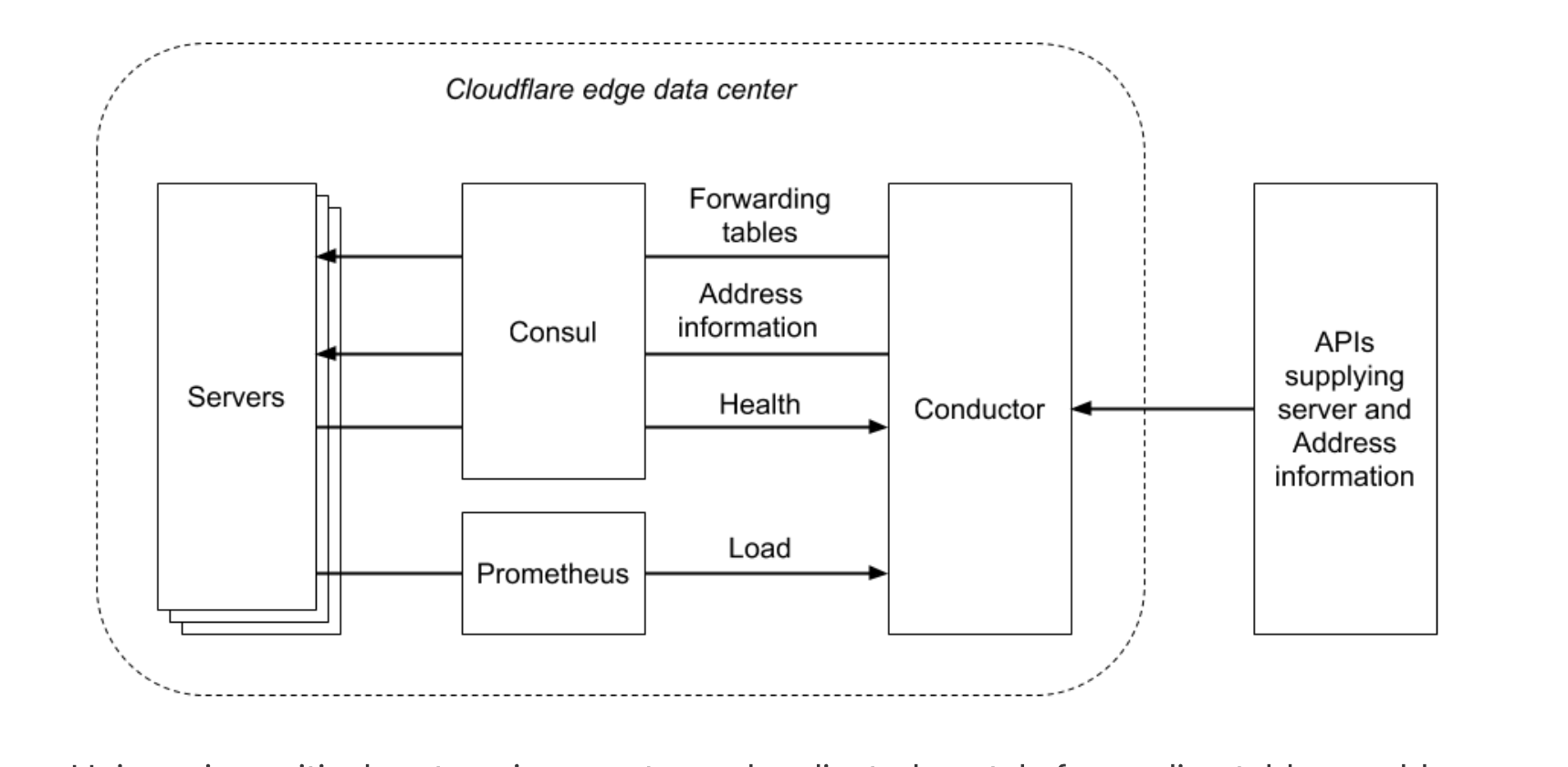

Starting from eBPF use cases, I came across Cloudflare's Unimog blog post. Unimog is an L4 TCP load balancer, and it solves a problem very similar to the one I worked on in ClickHouse.

It starts off with background on VIPs, Anycast, and data-center routing. These get traffic to the right data center, but they are still insufficient for load balancing within a data center (DC).

Similarity

It’s also not enough to give each server a fixed share of connections based on static estimates of their capacity. Not all connections consume the same amount of CPU. And there are other activities running on our servers and consuming CPU that are not directly driven by connections from clients. So in order to accurately balance load across servers, Unimog does dynamic load balancing: it takes regular measurements of the load on each of our servers, and uses a control loop that increases or decreases the number of connections going to each server so that their loads converge to an appropriate value.
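To make the control-loop idea concrete, here is a minimal sketch assuming a simple multiplicative proportional controller that steers every server toward the fleet-average load. The `adjust_weights` and `simulate` names, the gain, and the toy load model (background CPU not driven by connections, plus a per-connection cost that differs across servers) are hypothetical; this is not Unimog's actual controller.

```python
# Hypothetical sketch of a dynamic load-balancing control loop.
# Model, names, and gain are illustrative assumptions, not Unimog's controller.


def adjust_weights(weights, loads, gain=0.5, min_weight=1e-3):
    """Shift the share of new connections away from servers above the mean load.

    weights: server -> fraction of new connections (sums to 1)
    loads:   server -> measured load (e.g. CPU utilization in [0, 1])
    """
    target = sum(loads.values()) / len(loads)
    new = {}
    for server, w in weights.items():
        # Relative error: positive when the server is busier than average.
        error = (loads[server] - target) / target if target > 0 else 0.0
        # Clamp so a very busy server never drops to a zero or negative share.
        new[server] = max(min_weight, w * (1.0 - gain * error))
    total = sum(new.values())
    # Renormalize so the shares still sum to 1.
    return {server: w / total for server, w in new.items()}


def simulate(steps=200, traffic=0.6):
    # Toy model (assumption): background load that is not driven by client
    # connections, plus a per-connection CPU cost that differs per server.
    background = {"a": 0.1, "b": 0.3, "c": 0.0}
    cost = {"a": 1.0, "b": 1.0, "c": 2.0}
    weights = {s: 1 / 3 for s in background}
    loads = {}
    for _ in range(steps):
        # "Measure" each server's load under the current weights.
        loads = {s: background[s] + traffic * weights[s] * cost[s] for s in weights}
        weights = adjust_weights(weights, loads)
    return weights, loads


if __name__ == "__main__":
    final_weights, final_loads = simulate()
    print(final_weights)
    print(final_loads)
```

Starting from equal weights, server `b` (more background load) gradually sheds connections and `c` (more expensive connections) ends up with a smaller share than `a`, until the measured loads are roughly equal. A larger `gain` converges faster but can overshoot and oscillate.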

The approach of using Prometheus as the source of CPU utilization data is also very similar.
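CPU utilization is usually derived from cumulative counters rather than read directly: utilization = 1 - Δidle / Δtotal over a window. In PromQL this is commonly written as `1 - avg by (instance) (rate(node_cpu_seconds_total{mode="idle"}[1m]))`. The Python sketch below does the same arithmetic on two hypothetical snapshots of one machine's per-mode CPU seconds, already summed over cores; the snapshot format is an assumption, not a Prometheus client API.

```python
# Hypothetical sketch: CPU utilization from two snapshots of cumulative
# per-mode CPU seconds (the kind of counter node_exporter exposes as
# node_cpu_seconds_total). The dict-based snapshot format is an assumption.


def cpu_utilization(prev: dict, curr: dict) -> float:
    """Fraction of CPU time spent in non-idle modes between two snapshots."""
    deltas = {mode: curr[mode] - prev[mode] for mode in curr}
    total = sum(deltas.values())
    if total <= 0:
        return 0.0  # no time elapsed (or a counter reset); report no load
    return 1.0 - deltas.get("idle", 0.0) / total


if __name__ == "__main__":
    prev = {"user": 100.0, "system": 50.0, "idle": 850.0}
    curr = {"user": 130.0, "system": 60.0, "idle": 910.0}
    # 30 + 10 busy seconds out of 100 elapsed CPU seconds: about 0.4
    print(cpu_utilization(prev, curr))
```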

Differences: Unimog uses XDP eBPF programs instead of Envoy / Istio (Cloudflare already used eBPF), and it deploys the load balancer on every node for resilience, running on commodity edge servers.

Takeaways: what eBPF can do (XDP programs run right after the NIC receives a packet, before the kernel's networking stack starts processing it, which makes them fast); and using the difference in load across servers as an indicator of load-balancing effectiveness.
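The effectiveness indicator can be computed directly from per-server load samples. A minimal sketch, assuming loads are CPU utilization fractions keyed by hypothetical server names: the max-min gap is the "difference" in load, and the coefficient of variation is an added scale-free alternative, not something taken from the Unimog post. The sample numbers are made up for illustration.

```python
from statistics import mean, pstdev
from typing import Dict


def load_spread(loads: Dict[str, float]) -> float:
    """Gap between the busiest and the idlest server; 0 means perfectly even."""
    values = list(loads.values())
    return max(values) - min(values)


def load_cv(loads: Dict[str, float]) -> float:
    """Coefficient of variation (population stddev / mean), comparable across fleets."""
    values = list(loads.values())
    avg = mean(values)
    return pstdev(values) / avg if avg > 0 else 0.0


if __name__ == "__main__":
    before = {"a": 0.9, "b": 0.3, "c": 0.6}   # hypothetical samples before rebalancing
    after = {"a": 0.62, "b": 0.58, "c": 0.6}  # hypothetical samples after rebalancing
    print(load_spread(before), load_spread(after))
    print(load_cv(before), load_cv(after))
```

The max-min gap reacts to a single outlier server, while the coefficient of variation summarizes the whole fleet; tracking both over time shows whether the balancer is actually narrowing the spread.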

Other Load Balancers

- Google Maglev: an L4 LB that bypasses Linux kernel networking to save computation cost (DPDK follows a similar kernel-bypass idea for faster network stack iteration). It focuses on evenness of connections rather than CPU load, and uses consistent hashing for session stickiness. Maglev can be on the data path or not (backup mode). Reading it now, it no longer feels complicated.

- Facebook Katran: an XDP program for L4 TCP load balancing.

- GitHub GLB: the design Unimog takes many of its ideas from.
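Maglev's consistent hashing can be sketched with the lookup-table population algorithm described in the Maglev paper: each backend derives a permutation of table slots from an `offset` and a `skip`, and backends take turns claiming their next preferred free slot, so every backend ends up with nearly the same number of entries. The seeded SHA-256 `_hash` helper, the table size, and the string flow key are assumptions for illustration; Maglev hashes the packet's 5-tuple with its own hash functions.

```python
import hashlib
from typing import List


def _hash(key: str, seed: str) -> int:
    # Assumption: any two independent hash functions work; seeded SHA-256 for clarity.
    digest = hashlib.sha256(f"{seed}:{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def maglev_table(backends: List[str], m: int = 65537) -> List[str]:
    """Build a Maglev-style lookup table of size m (m must be prime)."""
    if not backends:
        raise ValueError("need at least one backend")
    n = len(backends)
    offsets = [_hash(b, "offset") % m for b in backends]
    skips = [_hash(b, "skip") % (m - 1) + 1 for b in backends]  # in [1, m-1]
    next_pos = [0] * n  # how far each backend has walked its permutation
    table = [None] * m
    filled = 0
    while True:
        # Round-robin: each backend claims one slot per turn.
        for i in range(n):
            slot = (offsets[i] + next_pos[i] * skips[i]) % m
            while table[slot] is not None:  # skip slots already taken
                next_pos[i] += 1
                slot = (offsets[i] + next_pos[i] * skips[i]) % m
            table[slot] = backends[i]
            next_pos[i] += 1
            filled += 1
            if filled == m:
                return table


def pick_backend(table: List[str], flow_key: str) -> str:
    # Same flow key -> same slot -> same backend while the table is unchanged.
    return table[_hash(flow_key, "flow") % len(table)]


if __name__ == "__main__":
    t = maglev_table(["b1", "b2", "b3"], m=251)
    print({b: t.count(b) for b in ["b1", "b2", "b3"]})
    print(pick_backend(t, "10.0.0.1:1234->10.0.0.2:80/tcp"))
```

Because `m` is prime and `skip` is in `[1, m-1]`, each permutation visits every slot, so the inner loop always finds a free one. When a backend is removed and the table is rebuilt, most slots tend to keep their previous backend, which is what provides session stickiness.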